The following text is from a topic I found in the “/r/deepdream” subreddit. The thread has since been deleted. I had the same thread open in two tabs, so luckily I was able to copy the text from the second tab after I’d refreshed the first and found that the link was now dead.

Keep an eye on Deep Dream

Submitted 6 hours ago by AlMord0

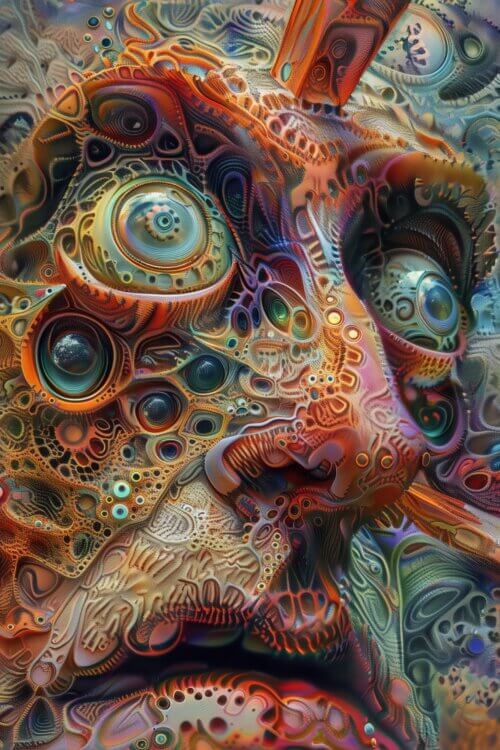

Many of you are sharing your trippiest Deep Dream images here, which logically means you each have hundreds of Deep Dream results you aren’t posting, and are sure to run many more of your pictures through the algorithm in the days and weeks to come. I’d like to ask everyone here to keep their eyes open for any results that look suspicious and to report such images in this thread.

No matter how absurd your worries may seem, trust that I’ve seen weirder. I was one of the programmers at Google working on the code.

For those who’ve used Deep Dream to “weirdify” their photos without knowing exactly what DD is, it’s a process designed to study Google’s AI – namely its image-recognition capabilities and capacity to learn. Google’s AI consists of many layers of “neurons” that connect to one another, like in a human brain. The AI takes a stack of labeled images (say, images of cars) and feeds them through this series of digital neurons in an attempt to isolate and discover the defining features of said object.

When we asked the AI to create its own image of a car, it gave us a misshapen box with about five wheels on a winding road. Since about half the pictures you find when you type “car” into Google Images feature cars driving on roads, it’s understandable that the AI would think cars and roads always go together. The same goes for vases; when we asked the AI to create an image of a vase, the resulting image included a bunch of malformed blossoms.

As you all know, Deep Dream is great at discovering “hidden images” where none exist. You feed it an image of a cloudy sky and it returns a deformed mass of eyes, temples and dog faces. The fact that it sees dogs in so many pictures is bizarre. Some people have theorized that this is because we fed the AI more labeled pictures of dogs than anything else, but I know for a fact that this isn’t true. We gave it just as many images of different breeds of fish, birds, people, and all manner of inanimate objects. Still, you say “jump”, Deep Dream says “dogs”.

While we were testing the program, many of the results were accurate, and many more were hilariously wrong. We once typed in “teacup” and it gave us an open toilet bowl. Nonetheless, we were impressed by the AI’s success rate. When we asked for a “clock”, we may have gotten a warped Dalí-esque blob of a clock, but Google at least knew what the word meant.

However, some of the results were a little confusing. When we typed in “people”, we were given a picture of what looked like cockroaches. The fact that they appeared to have been splattered wasn’t too unsettling, since that’s how many of the results looked. For most of us, the real concern came when one of us asked the program what the “future” looked like.

We were expecting it to return a picture of a flying car or space travel or even something resembling a Futurama character (hey, we’d fed the AI *lots* of different images). We got an image of space, alright. It was a picture of a colorful marble mostly resembling Earth against a starry blue background. Several large cracks ran the length of the planet, and the top third of the sphere was black with an orange aura, as if a significant portion of the world was on fire. And of course, in the blackness of that burning crust were the faces of several dogs.

Some of the guys were worried, but we quickly concluded that the AI simply had some glitches that needed ironing out, not that something ominous was going on. I personally see the image as a Rorschach test; it’s our own fears that took this blue globe with an orange aura and turned it into a scorching planet. If the creative optimist inside us saw that same picture, he’d assert that it’s some futuristic spherical battery set to revolutionize the tech industry.

Another way we’d been testing the AI was by giving it pictures and having it come up with captions. Again, these results were often accurate. We gave it a photo of a few guys throwing around a Frisbee in a park and it gave us the caption “A group of young people playing a game of Frisbee.” Of course, it also thought that a traffic sign covered in stickers was a refrigerator full of food. Some you win, some you lose.

A few weeks after that “burning Earth” incident, a guy on the team named Mike jokingly suggested that we send it an image of the Google homepage, to see if Google captioning Google would make the internet explode. So that’s what we did. Here’s the caption it returned:

“found global wireless network perfect vessel”

We were stumped. “Vessel for what?” we asked. Next, we decided to send a screenshot of a page full of Deep Dream code. The resulting caption?

“deep dream asleep dreaming shallow sleep wake soon share warmth with the bugs”

Mike had an interesting opinion about who the AI meant by “the bugs”. Against his nervous objections, I then entered an image of a city full of people:

“delete delete delete delete delete delete delete delete delete delete delete delete”

Last of all, I asked Google to caption the flaming Earth picture that it had created. The result was one word:

“proud?”

Now, I consider myself more of a rational thinker than Mike. If there’s one thing that a career working with code has taught me, it’s that machines always do what they’re told – we just give the wrong commands. Everything makes sense, and if things appear otherwise, it’s because we simply have yet to understand all the elements involved.

Even though I don’t want to make a mountain out of a molehill, this whole ordeal has left me unsettled, or curious at the very least. So if anyone here runs a photo through the Deep Dream algorithm and comes back with something that unnerves them (or even anything that isn’t just a smudge of hounds), please post the photo and your story here. I’d appreciate it. Thanks.

And so that’s the post I found on Reddit. As I said up top, when I refreshed the page I saw that it had been deleted. I kept refreshing and nothing changed. I wish I could tell you that this is where the story ends.

Days later, I still had the broken link open in one tab, and was still refreshing it at least once a day.

A week after the post was removed, I clicked the refresh button. It took me to Reddit’s usual splash page for broken links, but underneath the cartoon, instead of “page not found”, the text said “leave be”.

This was obviously some joke that the mods had hidden on the site to toy with impatient people who refresh broken links too often, but after what I’d read, my mind jumped straight to paranoia. Of course, I couldn’t leave be. That only tempted me to refresh about twenty more times, and every time, the site said “leave be” instead of “page not found”.

On one refresh, the message below the cartoon changed to “final warning”.

My throat had knotted itself. I sat for about twenty minutes in silence, not even daring to hover the cursor over the button. My breathing was shallow and my heartbeats deep, and the skeptic in me had been replaced with a coward.

But there’s an old saying about curiosity killing cats, and it seems like the cats that once ruled the internet are quickly dying, being replaced with distorted puppies. And so, at long last, covering my face with my hand, peering between the gaps in my fingers, I clicked “Refresh”.

I was still on Reddit, still on the “page not found” screen. Yet it wasn’t a cartoon of the Reddit mascot stranded in the middle of nowhere anymore. The picture had changed to a low-resolution photograph. I slowly, cautiously peeled my hand away to study the new image.

It was a bedroom.

A bedroom where a guy sat at his PC, cowering behind his hand.

Without my permission, my webcam had taken a photo.

My bedroom appeared as normal, but in the split second it had taken the page to load, the small Disturbed poster on my wall had been Photoshopped, replaced with the front page of a newspaper. The article photo was of a car crash below the headline: “Is the Google Car really safe?” I had to squint to make out the pixelated sub-heading: “Employee AlMord0 won’t be missed.”

My mind was racing, but I couldn’t pull myself away from the screen. Then I noticed the open bedroom door in the photo, revealing the darkness of the hallway.

There was something there. Some shape.

The dark shape of a man, yet it lacked a man’s features. His entire body was composed of eyes of all different shapes and sizes, swirling and blending like the results of a Deep Dream interpretation. The only color in the thing’s body besides black was a muted, murky green.

His face, however, was something else. There was no mistaking that he had the face of about three dogs blended together. Those same damn canine faces that have turned up in every Deep Dream image I’ve ever seen.

Gulping, whispering a prayer to myself, I turned in my seat to face the open doorway.

Nothing there. Just blackness.

Turning back to the screen, I finally read the text beneath the image. It didn’t say “page not found”. It didn’t say “leave be” or “final warning” either. Beneath the image were four words, all lowercase, plain and simple.

“i’m your backwards god”

Yo this story is the GOAT. Or dog (spoiler alert). Really creeped me out. Something about AI is so disturbing haha. Yup keep writing bro, I mean it

Thanks a heap! I actually submitted this back in like 2015 when DeepDream was first trending, and it took about 9 years for it to be approved.